Distributed systems and mersit, a Tiny Server Redundancy Manager

My previous post on this blog was published by the end of the long-gone month of June. Many things have changed since then, for example, I entered university and was pressed into creating a Facebook account (more or less separate from the rest of my online presence, so don’t look for me, I won’t add you). On that post, I rambled about the recovery from a big server outage that costed 42 hours of tny.im downtime, and over one week of server downtime. I learned my lessons (I doubt BlueVM learned theirs, but that’s a whole other story), and I went forward with what I said I would do: “setting up a new advanced and redundant system” for ensuring tny.im is always up.

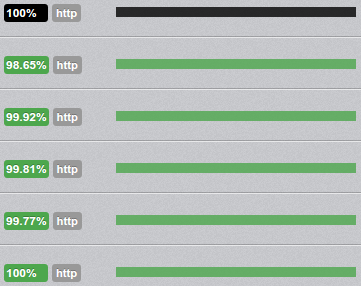

That system has been up and running for over two months now, with varying amounts of servers making the redundancy and load balancing, and a plethora of occasional hiccups. Right now it’s composed of three virtual servers (all from different providers…), but there were times when it was composed of five servers. These three servers are paid, and while they aren’t exactly expensive (but not the cheapest, either), you can imagine the bill, so let’s not talk about tny.im profitability now, OK? (I have kind of given up).

However, having three servers serving the same website, with all three of them being almost a clone of each other (which means, all have the same files and database contents, synced), in a DNS round-robin setup doesn’t directly lead to greater uptime. In fact, I have found out it can lead to more outages, since now the total downtime is approximately the sum of the downtime of each server. Of course, most of these outages are partial (as in, only users unlucky enough to have their DNS request resolved to the IP of a server that is down, will actually perceive the site as down), except for when the MariaDB replication freaks out and basically grinds all database operations, on all servers, to a halt, requiring a complicated manual restart of all MariaDB instances, in a specific order (yes, I have spent many hours searching for an alternative database system, and couldn’t find any that met my requirements).

In order to actually achieve greater uptime, one must have a system that automatically manages the DNS records so that the domain(s) of the website in question never have any records pointing to servers that are down. In other words, the “sheep” must be “hidden from sight” as soon as they go “bad”, and should be put back “in stage” once they become “good”. Being DNS something that was definitely not made for real-time record edits, with many systems caching DNS request results well beyond the specified TTL, this system obviously doesn’t ensure that the “bad sheep” are not invisible to everyone watching the show. But if it manages to do it for even a small percentage of the public, it’s already better than not hiding from anyone (and especially, if it successfully hides the problem from the uptime monitor, that’s even better 🙂 ). This explains why the DNS records for tny.im are set with TTLs of five minutes.

The development of such a DNS record management system was also more or less contemplated in my previous post, when I say:

I’ll take this downtime and new server acquisition as the motivation for setting up a new advanced and redundant system, so that if one server goes down, tny.im (and possibly this blog too) will continue to operate as normal.

And in the end, in a later edit:

On related news, Mirasm – the Tiny Server Redundancy Manager – is mostly finished, only needs some more testing to be put on production servers, managing the new tny.im redundancy system.

“Mostly finished”, as we all know, really means “It’s 99% ready, I only need to figure out the remaining 1% that consists on… everything that is tricky and I’m not sure how it’s done”. This is specially true in this case, as I had high requirements for my manager: it couldn’t use any resources other than the servers I had already (it would’ve been easy to have a separate server just for monitoring and editing DNS as needed, but I didn’t want to pay for yet another server on yet another provider), and it couldn’t fail more than tny.im itself. In fact, the time when the manager has to do more important work, is when it is not working, i.e. when a server goes down and so goes the manager. I finally finished the project, and it works as planned. I only got the name wrong…

Introducing mersit, a Tiny Server Redundancy Manager

Pronounced “m-eh-rs-ee-t”, with the first “e” being like the one in “explain”, mersit is a simple Python script (Python 2.7, because I wasn’t sure what libraries were available for 3.x nor if my servers would run it well) hacked together with some sections that definitely look like spaghetti code. The good news is, it works fine, and has been well tested, so if you study it in the “black box” way, there are no big problems with it.

The purpose of the script is to manage the DNS records of the website served by the group of synced servers, in this case, tny.im. It runs on each server, in a peer-to-peer fashion. The peers select a single master, that will monitor all the peers and manage the DNS as they go up and down, “deciding who’s on stage”, and all peers will check whether the master is up, and select a new one that will edit the DNS to “hide the master from the public” when it goes down.

I definitely want to open-source mersit at some point, but not now because it’s not ready for prime-time (see “spaghetti code”, above), and I want to change some things that will make it more general-purpose. mersit has been managing the live records for tny.im for the past week (it’s been peaceful).

I have gone so far as to write a read-me for mersit (mainly for me to read, as I know I’ll forget how it works within six months). I think it’s best if I put the start of the read-me here, instead of trying to explain it all, once again:

mersit - Tiny Redundant Server Manager Copyright 2014 tny. internet media This version is customized for tny.im This is a Python 2 script that manages a group of computers/servers/thin clients/machines in a network (local- or wide-area), by automatically executing actions when something relevant happens to one of the machines. We'll call the "machines" "peers". mersit assumes all peers and the network are trusted. The script is meant to be run directly on the peers that are to be controlled, in a setup where there is not a single point of failure. It is not of much use when run in a single peer; in the context of this script, a "group" only starts to make sense when it has over one element. We'll refer to this script as "controller software" or simply "controller", and to the other software that runs on a peer and which is to be monitored as "application". The controller is made to run unattended, even though it accepts commands (issued by an "operator") to trigger certain behavior manually. The "something relevant" mentioned in the first paragraph consists on one of these "events of interest": - A peer goes "online", that is, it is reachable by other peers and reports the status of its controller software as "OK" or "ready"; - A peer goes "offline", that is, it is either not reachable by at least some peers, or the controller is reporting its state as "not good" or "not ready"; - A peer becomes good-for-work (GFW), which means, that the application is functioning properly and performing its function (such as listening for incoming connections, data to process, etc.); - A peer becomes not-good-for-work (NFW), in which case the application is not functioning properly, is too busy to perform its function (over capacity), or is otherwise unavailable. Each peer works in a given "domain", which is the group the peer belongs to. The domain is specified by a name and secret which act basically like a username and password pair. Peers will only communicate with other peers of the same domain, that is, peers where the domain name and secret are the ones the controller is configured to use. The domain acts as the authentication element; an external party can not join, communicate or perform actions in a domain unless it knows the name and password used by the peers of the domain. (Please note, that communication between peers is not encrypted by the controller - it goes completely plain-text over the network. It is possible to secure the communication between peers using external tools; such secure functionality goes beyond the scope of this software. The "domain" is simply a basic authentication system, implemented using HTTP authentication, to ensure that peers of a certain group don't start talking with peers from other groups. The basic authentication system is enough to protect against the casual script-kiddie, but by no means adequate for protection from a malicious party in an untrusted/open network) The controller on each peer must know _a priori_ (i.e. before it starts) about where to find at least some of the controllers on other peers. Peer discovery doesn't happen automatically, however, once a peer's controller can communicate with another controller, it will add every controller in the "contact list" of the latter to its "contact list". Imagine the following situation: you have peers A, B and C (and their controller software). The controller in A only knows about peer B. The controller in B only knows about peer C. If you start the controller on peer A, then start the controller on peer B, peer A will tell peer B about its existence, and peer B will tell peer A about the existence of a peer C (independently of peer C being running/reachable). However, if the controller in A knew about no peer (other than itself), it would never find peer B or C even if their domain settings all matched. Even though a big domain can be bootstrapped from just two peers, to ensure good operation, all controllers should know about all peers. This way, if the controller on a peer resets for some reason, it will have a greater chance of reaching another peer. The "contact list" is the list of "known" peers. The controller keeps three lists of peers in memory: the "known" peers, the "reachable" peers, and the "GFW" (good-for-work) peers. The list of known peers is initialized from the source code's configuration section when the controller starts. It then proceeds to see which peers are "reachable", that is, can be reached through the network, are in the same domain (not being in the same domain gives the same effect as not being reachable over the network) and have their controller software report its state as "OK". This initial status checking includes the exchange of some other information about the controller. Once this initial peer identification is done, the controller enters a monitoring loop where it will keep the contents of the three lists up-to-date. The controller keeps running this infinite loop throughout most of its lifetime. How the lists are kept up-to-date and what happens when their contents change is something that depends on the current controller mode. There are two possible modes for controller operation: master and non-master. There is exactly one controller in master mode per domain, and this controller is usually called "the master" (the master peer has the controller in master mode). The differences between the modes are mostly related to what happens in the monitoring loop, but before going into those differences, it is important to understand how the controllers decide which peer is the master peer. When a controller starts and there are no reachable peers, it promotes itself to master, since there must be exactly one master per domain. Later, when another controller joins the domain (either because it started or because it went online after e.g. a period without connections or power), it checks which peers are reachable from its "known" list and "asks" them which is the master peer. Every peer should reply with the same peer, in which case the new controller assumes that peer is the master, and informs the master about its existence, to account for the fact that the new peer may not be in the master's "known" list. However, and especially on domains where not all peers initially know about every other peer, it's possible that a "head split" occurs and there are two masters in the same domain. Imagine a domain where there are four peers D, E, F and G. D only knows about E, which in turn only knows about D. F doesn't know about any peer, and we'll leave G aside for now. All peers are offline. The D controller starts up, sees it can't reach the only peer it knows (E), so calls itself master. The E controller starts up and reaches D, D says it is the master, E assumes D is master, all is fine. The F controller starts up, sees it can't reach any peer because its "known" list is empty, so calls itself master and sits quietly waiting for someone to contact it, which in turn would let it know about more peers. We now have the following situation ([M] represents a controller in master mode, --- represents the knowledge peers have of each other): -DOMAIN------------------ | | | D[M]-----E F[M] | | | ------------------------- Things could be like this forever, and no conflicts would occur - however, this is probably not a domain you want to have, since F doesn't know about any "event of interest" related to D or E, and these two don't know about any events related to F. In this situation, D--E and F act like separate domains. Assume that G is a peer which knows about D, E and F, and that its controller starts up, contacting D, E and F. The first two will agree that D is the current master, but F will disagree and say it is the master. At this point we have a conflict. There are many ways to solve this, including some form of "voting" (e.g. the peer the largest amount of the peers say is the master effectively becomes it), but mersit solves this in a simpler way. The controller checks that everyone in the domain agrees on what peer is the master on every iteration of the monitoring loop. It does this by "asking" each peer in the list of known peers who is the master. The first peer asked is free to reply with any peer. The ones that are asked next must agree with the first one. If not, the controller that was doing the loop tells each disagreeing peer that the actual master, is the one from the first peer's reply. It is possible that a minority is asked first, and thus everyone is forced to "change its opinion" to that of the minority. This is not a problem - mersit assumes all peers are trusted. Note that it can sometimes take some iterations of the monitoring loop for all peers to settle on a single master, because two (or more) peers may be trying to "change the opinion" of the other peers to different masters. This is not a problem either, because even if this kind of concurrency conflict happens once or twice in a row, it will stop happening as soon as one peer is faster than the other to tell everyone (including the other peer(s) that are trying to "change opinions"). What matters is that in the end, every peer knows about all others, and there is a single master. In this case, it could be D: -DOMAIN-------------------- | | | D[M]-----E-----F-----G | | | --------------------------- If the master becomes unreachable, or its controller stops working, the other peers will also find themselves a new master, by sorting the list of reachable peers alphabetically and choosing the first peer in the sorted list. Of course, if for some reason the list is not consistent across peers, the peers will try to "convince" others to settle on who they "think" is the master as previously explained, until everyone is set to the same master. Being the master essentially changes what happens in the monitoring loop. When a controller is in master mode, it is responsible for updating the list of "reachable" and "GFW" peers, by checking which peers are reachable (both in terms of network and in terms of functioning controller) and which have the application in a working condition. If there are changes in the lists that indicate an event of interest, it runs the appropriate handler. If, for example, a peer becomes NFW due to a problem in the application, it will stop being in the GFW list, and the handler function for when a peer leaves that list will be run with the peer in question as the argument. If the master becomes unreachable (network error, controller error, etc.), a new master will be found, as explained in the previous paragraph, and the new master is responsible for running the handler with the previous master as argument. When a peer is not master, it won't run any handlers for events of interest, and it is not responsible for updating the "reachable" and "GFW" lists - it will retrieve these from the master. The controllers on all peers need to keep their lists up-to-date, sharing a "vision of the domain" similar to that of the master, so that any peer can become a master instantly in case of necessity, without having to spend time performing checks on all peers and ensuring it has the best-and-latest list of "known" peers. The operator can manually tell a controller to become the domain's master. When the appropriate command is issued, the controller will send a command to every other controller instructing them to switch to the new master. This command may not always have an effect in some controllers, because while the first controller is sending the commands, other controllers are seeing if everyone agrees on who's the master, and issuing the same commands with another master in mind. This is a sequence of events picturing the situation, in a domain where there are three peers H, I and J, and H is the initial master: 0. ... 1. Peer H checks that every controller agrees it is the master (all agree); 2. Peer I checks that every controller agrees H is the master (all agree); 3. Peer J checks that every controller agrees H is the master (all agree); 4. Operator issues command for peer I to become master; 5. Controller on I assumes it is master, starts sending commands to other peers; 6. Peer H checks that every controller agrees it is the master, before the message from I that I is the new master can get to H; 7. Peer H finds out I (and possibly others) don't agree, sends them commands to change the master back to H; 8. Peer I changes master back to H; 9. Peer I checks that every controller agrees H is the master (all agree); 10. Peer J checks that every controller agrees H is the master (all agree); 11. ... If the master doesn't change when the manual command is issued, it's a matter of trying again. Most often, this kind of concurrency problem does not occur, and even when it does, it does no damage. While it is true that mersit could detect this situation and keep issuing commands automatically until the decision takes effect, we chose to not make it this way to allow the human operator finer control. The primary focus of mersit is to monitor a distributed application. The master checks if the application, or part of the application, running on a certain peer is in working condition by asking that peer's controller about the state of the application it is monitoring. In turn, this controller runs a function, defined by the mersit user in the mersit source code, that should check the application and return True (if application OK) or False (if not). This can involve, for example, making a HTTP request to a HTTP server in that peer to verify it is working. The controller then communicates the status of the application to the master (which may be itself). All this shouldn't take too long, especially when the domain has many servers, as only one peer is asked at a time. If checking the status of the application typically takes over one second, it is best to store the last known status in a variable, and update that state periodically in an asynchronous manner that may be external to the mersit script.

The part related to DNS records is not explained on the read-me, because it is related to the handlers (which each mersit user would customize to the specific needs of the system – as I said, I tried to make it a general-purpose script). Sounds interesting? Feel free to ask questions, or point out problems, in the comments.

Comments